Who’s Responsible When Machines Make Mistakes?

By Stanislav Kondrashov

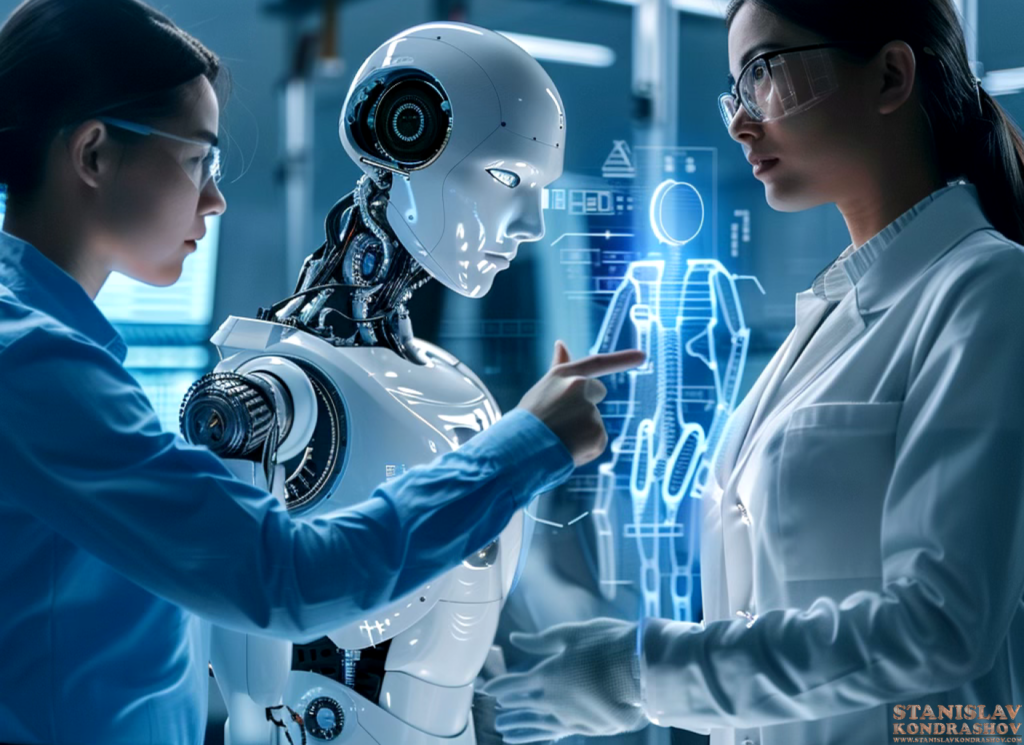

As artificial intelligence (AI) continues to advance and integrate into more aspects of our lives, it raises complex legal and ethical questions. One of the most pressing is determining accountability when AI systems make mistakes. From autonomous vehicles to AI-powered medical diagnoses, understanding who is responsible when something goes wrong is crucial for developers, users, and regulators alike.

The Challenge of Accountability

AI systems are designed to make decisions based on data and algorithms, often without direct human intervention. This raises the question of liability: when an AI-driven action results in harm or error, who should be held accountable? Traditional legal frameworks struggle to address this, as they are typically based on human agency and intent.

For instance, if a self-driving car causes an accident, is the manufacturer liable, or is it the software developer who designed the AI? Alternatively, should the responsibility fall on the owner who uses the vehicle? These questions highlight the need for clear legal guidelines and standards to navigate the complexities of AI accountability.

Legal Precedents and Emerging Frameworks

Currently, there are few legal precedents specifically addressing AI liability, but some frameworks are beginning to emerge. Governments and regulatory bodies are exploring various approaches to assign responsibility and ensure accountability. Some proposed solutions include:

- Strict Liability: This approach would hold manufacturers or developers strictly liable for any harm caused by their AI systems, regardless of fault or negligence. This could incentivize companies to prioritize safety and reliability in AI development.

- Shared Liability: Liability could be distributed among different parties involved in the AI system’s lifecycle, including developers, manufacturers, and users. This would recognize the collaborative nature of AI development and usage.

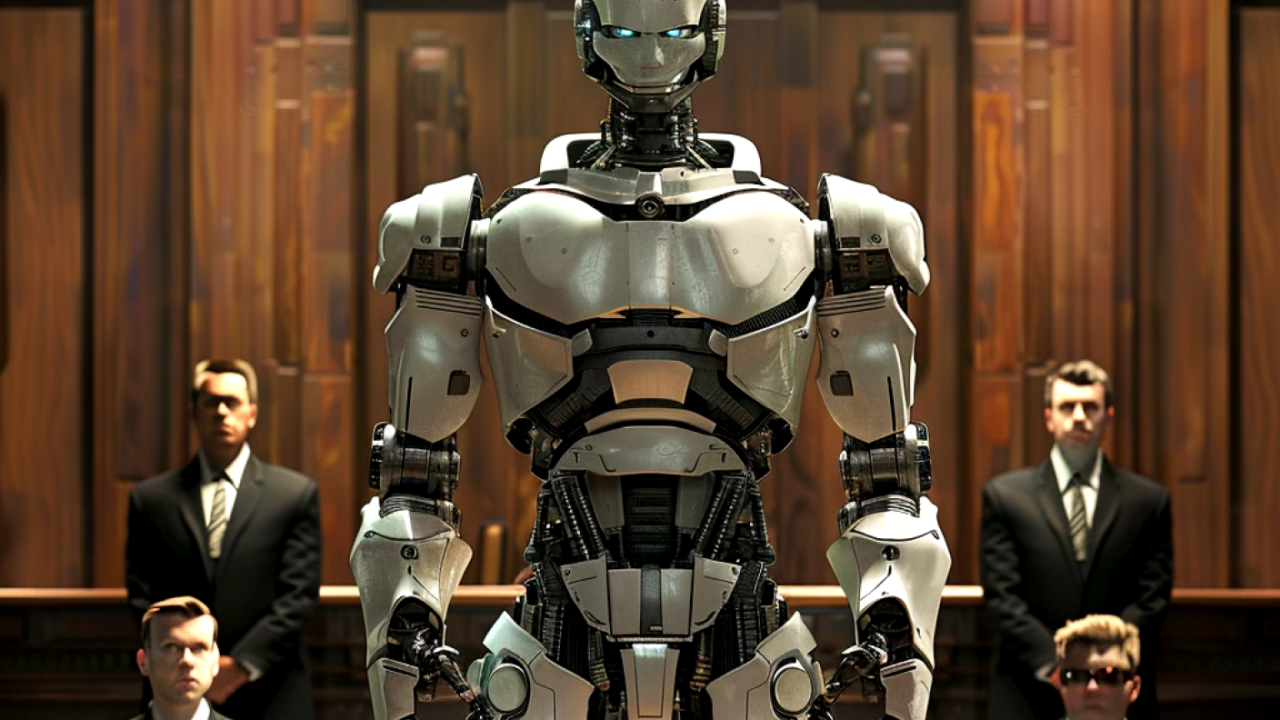

- AI Legal Personhood: Some experts propose granting legal personhood to AI systems, treating them as entities that can bear responsibility. While this idea is controversial, it reflects the need to rethink traditional legal concepts in the context of advanced AI.

Ethical Considerations

Beyond legal accountability, there are significant ethical considerations. AI systems often operate in ways that are opaque to users, making it difficult to understand how decisions are made. This lack of transparency can exacerbate issues of trust and fairness. Ethical AI development should focus on creating systems that are explainable, fair, and aligned with human values.

Moreover, biases in AI algorithms can lead to discriminatory outcomes, raising further ethical and legal challenges. Ensuring that AI systems are developed and deployed with fairness and equity in mind is essential to avoid reinforcing societal inequalities.

The Role of Regulation

Regulation will play a critical role in shaping the future of AI accountability. Policymakers need to balance innovation with the protection of public interests. Effective regulation should establish clear standards for AI development, promote transparency, and ensure that mechanisms for redress are available when things go wrong.

International cooperation will also be crucial, as AI technology and its implications transcend national borders. Harmonizing regulations and standards globally can help create a consistent framework for AI accountability.

Looking Ahead

As AI continues to evolve, the legal landscape must adapt to address the unique challenges it presents. Clear accountability mechanisms will be essential to foster public trust and ensure that the benefits of AI are realized responsibly. By addressing the legal and ethical implications of AI development, we can pave the way for a future where technology serves humanity effectively and justly.
